Data Wrangling

Make sure you have the tidyverse installed, and the SAFI data set

downloaded to your data folder by running the code from the Set-up section

Basic Data Manipulation

library(tidyverse)

library(here)

read_csv(here("data/SAFI_clean.csv"), na = "NULL") %>%

filter(village == "Chirodzo") %>%

mutate(people_per_room = no_membrs / rooms,

years_liv = if_else(years_liv > 90, NA, years_liv),

respondent_wall_type = as_factor(respondent_wall_type),

respondent_wall_type = fct_recode(respondent_wall_type,

"Burned bricks" = "burntbricks",

"Mud Daub" = "muddaub",

"Sun bricks" = "sunbricks"),

conflict_yn = case_when(affect_conflicts == "frequently" ~ 1,

affect_conflicts == "more_once" ~ 1,

affect_conflicts == "once" ~ 1,

affect_conflicts == "never" ~ 0,

.default = NA),

day = day(interview_date),

month = month(interview_date),

year = year(interview_date)) %>%

select(key_ID:rooms, day:people_per_room, -village) %>%

filter(interview_date > "2016-11-16" & interview_date < '2017-01-01')## # A tibble: 10 × 9

## key_ID interview_date no_membrs years_liv respondent_wall_type rooms

## <dbl> <dttm> <dbl> <dbl> <fct> <dbl>

## 1 10 2016-12-16 00:00:00 12 23 Burned bricks 5

## 2 34 2016-11-17 00:00:00 8 18 Burned bricks 3

## 3 35 2016-11-17 00:00:00 5 45 Mud Daub 1

## 4 36 2016-11-17 00:00:00 6 23 Sun bricks 1

## 5 37 2016-11-17 00:00:00 3 8 Burned bricks 1

## 6 43 2016-11-17 00:00:00 7 29 Mud Daub 1

## 7 44 2016-11-17 00:00:00 2 6 Mud Daub 1

## 8 45 2016-11-17 00:00:00 9 7 Mud Daub 1

## 9 46 2016-11-17 00:00:00 10 42 Burned bricks 2

## 10 47 2016-11-17 00:00:00 2 2 Mud Daub 1

## # ℹ 3 more variables: day <int>, conflict_yn <dbl>, people_per_room <dbl>Pivoting (or reshaping)

In tidyverse, reshaping is called pivoting. Here’s how you pivot a household roster (reshape wider) so you can merge it with the household data.

Creating fake data

First, I create sa fake household roster, based on the SAFI data,

making sure that the household roster has a number of lines

for each household that is equal to the household size, and has two

randomly generated variables: female and age. Note that age may

be -99, which should be considered missing.

long_data <-

read_csv(here("data/SAFI_clean.csv"), na = "NULL") %>%

select(key_ID,no_membrs ) %>%

uncount(no_membrs) %>%

group_by(key_ID) %>%

mutate(member_ID = row_number()) %>%

rowwise() %>%

mutate(female = sample(0:1,1),

age = case_when(member_ID == 1 ~ sample(18:86,1),

.default = sample(c(0:86,-99),1))) %>%

ungroup()

long_data## # A tibble: 942 × 4

## key_ID member_ID female age

## <dbl> <int> <int> <dbl>

## 1 1 1 1 71

## 2 1 2 0 68

## 3 1 3 1 31

## 4 2 1 1 52

## 5 2 2 1 80

## 6 2 3 1 19

## 7 2 4 0 4

## 8 2 5 1 72

## 9 2 6 1 15

## 10 2 7 1 65

## # ℹ 932 more rowsPivoting long to wide

To merge this into our main data set, we need to make sure we go back to having 1 observation per household. We will do this by using pivot_wider():

wide_data <-

long_data %>%

pivot_wider(names_from = member_ID,

values_from = !ends_with("_ID")) %>%

relocate(key_ID, ends_with(paste0("_",1:19)))

wide_data ## # A tibble: 131 × 39

## key_ID female_1 age_1 female_2 age_2 female_3 age_3 female_4 age_4 female_5

## <dbl> <int> <dbl> <int> <dbl> <int> <dbl> <int> <dbl> <int>

## 1 1 1 71 0 68 1 31 NA NA NA

## 2 2 1 52 1 80 1 19 0 4 1

## 3 3 0 38 0 53 0 86 1 30 1

## 4 4 1 85 1 68 0 22 0 84 1

## 5 5 0 55 1 42 1 74 1 77 1

## 6 6 1 21 0 19 0 27 NA NA NA

## 7 7 0 51 0 81 0 79 1 21 1

## 8 8 1 60 1 17 1 9 1 64 0

## 9 9 1 31 1 34 0 53 0 48 1

## 10 10 1 58 1 1 1 61 0 56 1

## # ℹ 121 more rows

## # ℹ 29 more variables: age_5 <dbl>, female_6 <int>, age_6 <dbl>,

## # female_7 <int>, age_7 <dbl>, female_8 <int>, age_8 <dbl>, female_9 <int>,

## # age_9 <dbl>, female_10 <int>, age_10 <dbl>, female_11 <int>, age_11 <dbl>,

## # female_12 <int>, age_12 <dbl>, female_13 <int>, age_13 <dbl>,

## # female_14 <int>, age_14 <dbl>, female_15 <int>, age_15 <dbl>,

## # female_16 <int>, age_16 <dbl>, female_17 <int>, age_17 <dbl>, …We only needed to specify two options:

- names_from: this is the column that contains the names (or usually numbers)

for each of our units of analysis. In this case, the

member_ID. - values_from: the variables containing the data. All variables you specify

here, will get one column for each possible value of names_from.

In our case, these variables

femaleandage. I used tidy select syntax to specify all variables except the ones ending in_ID. - I use

relocate()to reorder the variables.

Pivoting wide to long

If we had started with wide data, and had wanted to transform to

long data, we’d have to use pivot_longer():

## # A tibble: 4,978 × 3

## key_ID name value

## <dbl> <chr> <dbl>

## 1 1 female_1 1

## 2 1 age_1 71

## 3 1 female_2 0

## 4 1 age_2 68

## 5 1 female_3 1

## 6 1 age_3 31

## 7 1 female_4 NA

## 8 1 age_4 NA

## 9 1 female_5 NA

## 10 1 age_5 NA

## # ℹ 4,968 more rowsThis was easy since the syntax of pivot_longer() is the exact opposite of

pivot_wider(), but the result is pretty useless:

- The

namecolumn contains two things: a variable name and amember_ID; - The data is too long: I’d like

ageandfemaleto be two separate variables ; and - There’s many empty rows: there’s and age and female row for 19 possible members for each household, but most households are smaller than that.

I could use separate_wider_delim(),

pivot_wider(), and filter(!is.na()) to address those, but that’s not elegant

at all.

I can do all of this within the pivot_longer() call by using the names_to

and names_sep options:

wide_data %>%

pivot_longer(!key_ID,

names_to = c(".value", "member_ID"),

names_sep="_",

values_drop_na = TRUE,

names_transform = list(member_ID = as.integer))## # A tibble: 942 × 4

## key_ID member_ID female age

## <dbl> <int> <int> <dbl>

## 1 1 1 1 71

## 2 1 2 0 68

## 3 1 3 1 31

## 4 2 1 1 52

## 5 2 2 1 80

## 6 2 3 1 19

## 7 2 4 0 4

## 8 2 5 1 72

## 9 2 6 1 15

## 10 2 7 1 65

## # ℹ 932 more rowsIn this case, the syntax is a bit harder to understand. It’s good to think first

what the original data looks like, and how I intend to transform it.

The wide data has columns key_ID, age_1-19 and female_1-19.

I don’t really want to touch the key_ID column.

I want to turn the columns age_1-19 and female_1-19 into three columns:

female, age and member_ID.

This translates to the options we passed to pivot_longer() as follows:

!key_ID: We want to pivot the data that’s in all columns except key_ID.names_to = c(".value", "member_ID"): this specifies the new columns we want to create. It basically says that the existing column names consist of two parts: one part (i.e. female and age) that we wish to keep as column names of variables that will contain my values, and one part (i.e. the numbers 1-19) which should be put into a new column which we will “member_ID”.names_sep=: this indicates how the two parts mentioned above are separated. In more difficult cases, you’ll have to use thenames_patternoption. This requires some knowledge of regular expressions, so here’s two examples:- If there is no seperator (

age1,female1etc…):names_pattern = "(.*\\D)([0-9]+)$". In this regular expression,.*\\Dmatches a string of any length, of any characters, as long as it ends with something other than a digit. The[0-9]+$matches any number of digits at the end of the string. The parentheses indicate how the string should be separated to form variable names and member_ID. - If the separator is used in other places in variable names (

member_age_1etc…):names_pattern = "(.*)_([0-9]+)$".

- If there is no seperator (

values_drop_na = TRUE: tells R to drop rows that have missing data for all variables. This prevents the issue where we hadd too many rows.names_transform: by default, allnamecolumns will be character types, butmember_IDonly contains integers, so we transform it to integer. This is completely optional.

Joining (or merging) data

Tidyverse has four functions to join (or merge, as Stata calls it) two

data sets. The functions that differ in the way they treat observations that are in one data set but not the other.

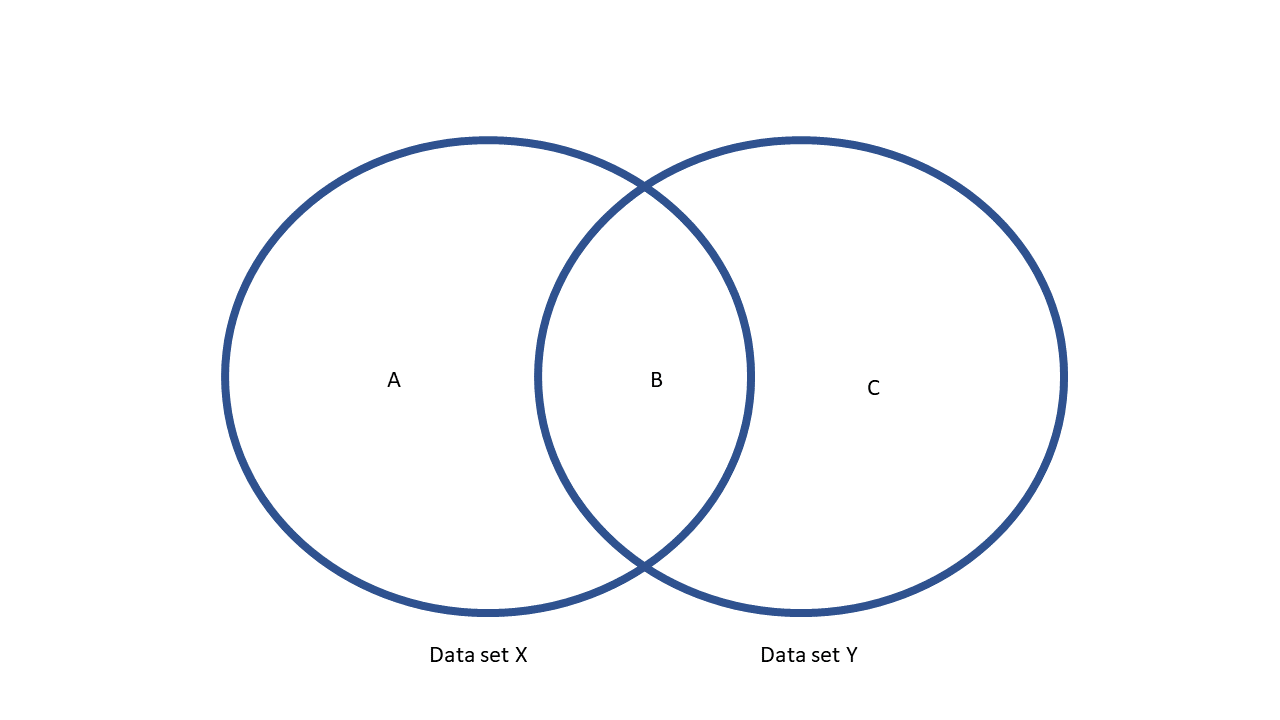

Consider the diagram below.

It has two data sets, x (in Stata terms, this is the master data set) and y (the using

data set in Stata terms). They have overlapping rows (area B), but also

rows that are only in x (area A) or only in y (area C).

The four join functions work as follows:

inner_join(x,y)will only keep area B.left_join(x,y)will keep areas A and B.right_join(x,y)will keep areas B and C.full_join(x,y)will keep areas A, B, and C.

In our case, the data sets match perfectly, i.e. we only have an area B, so there is no practical difference. I chose left_join() so the number of

observations in my household survey is guaranteed to remain the same.

To merge the roster to the household data, we use the join_by function:

## Rows: 131 Columns: 14

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: ","

## chr (7): village, respondent_wall_type, memb_assoc, affect_conflicts, items...

## dbl (6): key_ID, no_membrs, years_liv, rooms, liv_count, no_meals

## dttm (1): interview_date

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

## Joining with `by = join_by(key_ID)`## # A tibble: 131 × 52

## key_ID village interview_date no_membrs years_liv respondent_wall_type

## <dbl> <chr> <dttm> <dbl> <dbl> <chr>

## 1 1 God 2016-11-17 00:00:00 3 4 muddaub

## 2 2 God 2016-11-17 00:00:00 7 9 muddaub

## 3 3 God 2016-11-17 00:00:00 10 15 burntbricks

## 4 4 God 2016-11-17 00:00:00 7 6 burntbricks

## 5 5 God 2016-11-17 00:00:00 7 40 burntbricks

## 6 6 God 2016-11-17 00:00:00 3 3 muddaub

## 7 7 God 2016-11-17 00:00:00 6 38 muddaub

## 8 8 Chirodzo 2016-11-16 00:00:00 12 70 burntbricks

## 9 9 Chirodzo 2016-11-16 00:00:00 8 6 burntbricks

## 10 10 Chirodzo 2016-12-16 00:00:00 12 23 burntbricks

## # ℹ 121 more rows

## # ℹ 46 more variables: rooms <dbl>, memb_assoc <chr>, affect_conflicts <chr>,

## # liv_count <dbl>, items_owned <chr>, no_meals <dbl>, months_lack_food <chr>,

## # instanceID <chr>, female_1 <int>, age_1 <dbl>, female_2 <int>, age_2 <dbl>,

## # female_3 <int>, age_3 <dbl>, female_4 <int>, age_4 <dbl>, female_5 <int>,

## # age_5 <dbl>, female_6 <int>, age_6 <dbl>, female_7 <int>, age_7 <dbl>,

## # female_8 <int>, age_8 <dbl>, female_9 <int>, age_9 <dbl>, …Note that we didn’t specify identifiers, like we would in Stata. R

assumed that the variables that appear in both data frames are the

identifiers, in this case key_ID. Use the by option to change this.

Going the other way around, joining the household data to the roster data, is equally easy:

long_data %>%

left_join(

read_csv(here("data/SAFI_clean.csv"), na = "NULL") %>%

select(key_ID,village,interview_date))## Rows: 131 Columns: 14

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: ","

## chr (7): village, respondent_wall_type, memb_assoc, affect_conflicts, items...

## dbl (6): key_ID, no_membrs, years_liv, rooms, liv_count, no_meals

## dttm (1): interview_date

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

## Joining with `by = join_by(key_ID)`## # A tibble: 942 × 6

## key_ID member_ID female age village interview_date

## <dbl> <int> <int> <dbl> <chr> <dttm>

## 1 1 1 1 71 God 2016-11-17 00:00:00

## 2 1 2 0 68 God 2016-11-17 00:00:00

## 3 1 3 1 31 God 2016-11-17 00:00:00

## 4 2 1 1 52 God 2016-11-17 00:00:00

## 5 2 2 1 80 God 2016-11-17 00:00:00

## 6 2 3 1 19 God 2016-11-17 00:00:00

## 7 2 4 0 4 God 2016-11-17 00:00:00

## 8 2 5 1 72 God 2016-11-17 00:00:00

## 9 2 6 1 15 God 2016-11-17 00:00:00

## 10 2 7 1 65 God 2016-11-17 00:00:00

## # ℹ 932 more rowsNote that here I only merged in two variables, by using select and a pipe within the left_join() function.

Summarizing over groups (or collapsing data)

To compute summary statistics (sums, counts, means etc.) over a group,

we use the group_by() and summarize() functions. For example, to

compute the household size, number of women and average age in each household.

But before doing anything, I make sure the -99s in the age variable are treated

as missing, using a simple mutate() to conver them to NA.

long_data %>%

group_by(key_ID) %>%

mutate(age = if_else(age == -99,NA,age)) %>%

summarize(hh_size = n(), num_women = sum(female), mean_age = mean(age, na.rm = TRUE))## # A tibble: 131 × 4

## key_ID hh_size num_women mean_age

## <dbl> <int> <int> <dbl>

## 1 1 3 2 56.7

## 2 2 7 6 43.9

## 3 3 10 4 52.6

## 4 4 7 5 51.1

## 5 5 7 6 52.9

## 6 6 3 1 22.3

## 7 7 6 3 49.3

## 8 8 12 6 37

## 9 9 8 4 35.4

## 10 10 12 10 43.3

## # ℹ 121 more rowsRow-wise Operations

Suppose we wanted to run an operation over multiple variables. For example to

get the household size, number of women and average age from our wide data.

The easiest, and probably best, way to do this in R is by reshaping to long,

and then use summarize, like we did above. But in Stata you would probably use some sort of

egen function, so that may come natural.

You can do similar things in R. It’s just a bit more complex than in Stata.

across(): doing the same operations on multiple variables using across

First, we need to make sure we update -99 to NA in all age_ variables.

I use the across() function, which takes two arguments: a column specifcation

(for which I use tidy select

syntax), and a function: basically, the syntax is the same as in the mutate()

step above, but with a tilde (~) in front of ifelse() and .x instead of the variable name.

## # A tibble: 131 × 39

## key_ID female_1 age_1 female_2 age_2 female_3 age_3 female_4 age_4 female_5

## <dbl> <int> <dbl> <int> <dbl> <int> <dbl> <int> <dbl> <int>

## 1 1 1 71 0 68 1 31 NA NA NA

## 2 2 1 52 1 80 1 19 0 4 1

## 3 3 0 38 0 53 0 86 1 30 1

## 4 4 1 85 1 68 0 22 0 84 1

## 5 5 0 55 1 42 1 74 1 77 1

## 6 6 1 21 0 19 0 27 NA NA NA

## 7 7 0 51 0 81 0 79 1 21 1

## 8 8 1 60 1 17 1 9 1 64 0

## 9 9 1 31 1 34 0 53 0 48 1

## 10 10 1 58 1 1 1 61 0 56 1

## # ℹ 121 more rows

## # ℹ 29 more variables: age_5 <dbl>, female_6 <int>, age_6 <dbl>,

## # female_7 <int>, age_7 <dbl>, female_8 <int>, age_8 <dbl>, female_9 <int>,

## # age_9 <dbl>, female_10 <int>, age_10 <dbl>, female_11 <int>, age_11 <dbl>,

## # female_12 <int>, age_12 <dbl>, female_13 <int>, age_13 <dbl>,

## # female_14 <int>, age_14 <dbl>, female_15 <int>, age_15 <dbl>,

## # female_16 <int>, age_16 <dbl>, female_17 <int>, age_17 <dbl>, …Notes:

- You can use

across(.cols = where(is.numeric), .fn = ...)to apply a function to all numeric variables. - You can also combine

across()withsummarize()to summarize multiple variables more easily. See the section on faceting for an example.

rowSums() and rowMeans(): fast row-wise sums and means

To compute means and sums across rows, use rowSums() and rowMeans():

wide_data %>%

mutate(across(.cols = starts_with("age_"),

.fn = ~if_else(.x == -99,NA,.x))) %>%

mutate(mean_age = rowMeans(across(starts_with("age_")),

na.rm=TRUE),

num_women = rowSums(across(starts_with("female_")),

na.rm=TRUE),

hh_size = rowSums(!is.na(across(starts_with("female_"))))) %>%

select(key_ID,hh_size,num_women, mean_age) %>%

ungroup()## # A tibble: 131 × 4

## key_ID hh_size num_women mean_age

## <dbl> <dbl> <dbl> <dbl>

## 1 1 3 2 56.7

## 2 2 7 6 43.9

## 3 3 10 4 52.6

## 4 4 7 5 51.1

## 5 5 7 6 52.9

## 6 6 3 1 22.3

## 7 7 6 3 49.3

## 8 8 12 6 37

## 9 9 8 4 35.4

## 10 10 12 10 43.3

## # ℹ 121 more rowsc_across(): passing values from multiple variables to summarizing functions

If you want to compute something other than means or sums, you can use c_across()

to get a bunch of variables into any function you want:

wide_data %>%

mutate(across(.cols = starts_with("age_"),

.fn = ~if_else(.x == -99,NA,.x))) %>%

rowwise() %>%

mutate(max_age = max(c_across(starts_with("age_")),

na.rm=TRUE),

sd_age = sd(c_across(starts_with("age_")),

na.rm=TRUE)) %>%

select(key_ID,max_age,sd_age) %>%

ungroup()## # A tibble: 131 × 3

## key_ID max_age sd_age

## <dbl> <dbl> <dbl>

## 1 1 71 22.3

## 2 2 80 30.7

## 3 3 86 22.3

## 4 4 85 33.9

## 5 5 77 20.3

## 6 6 27 4.16

## 7 7 81 27.1

## 8 8 72 23.2

## 9 9 55 20.4

## 10 10 72 21.8

## # ℹ 121 more rowsThe key trick here is the combination of rowwise() and c_across().

rowwise() ensures all summaries are computed per row, and c_across()

allows you to use tidy select syntax within any function.

Do note that while this is very flexible, it can be EXTREMLY slow. If you have a large dataset, it’s probably faster to pivot the data to long first.

Splitting multi-response variable into dummies

The SAFI data contains a number of columns that contain all responses selected

in a multiple response questions. For example, the variables items_owned can

contain something like "bicycle;television;solar_panel;table". We want to

split this into dummies: one for each possible answers. There’s a number of

ways to do this, but the most convenient is using sepate_longer()

read_csv(here("data/SAFI_clean.csv"), na = "NULL") %>%

separate_longer_delim(items_owned, delim = ";") %>%

mutate(value = 1) %>%

pivot_wider(names_from = items_owned,

values_from = value,

names_glue = "owns_{items_owned}",

values_fill = 0) %>%

left_join(

read_csv(here("data/SAFI_clean.csv"), na = "NULL") %>%

select(key_ID,items_owned)) %>%

select(items_owned, starts_with("owns_")

) %>%

head()## # A tibble: 6 × 19

## items_owned owns_bicycle owns_television owns_solar_panel owns_table

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 bicycle;television;s… 1 1 1 1

## 2 cow_cart;bicycle;rad… 1 0 1 1

## 3 solar_torch 0 0 0 0

## 4 bicycle;radio;cow_pl… 1 0 1 0

## 5 motorcyle;radio;cow_… 0 0 0 0

## 6 <NA> 0 0 0 0

## # ℹ 14 more variables: owns_cow_cart <dbl>, owns_radio <dbl>,

## # owns_cow_plough <dbl>, owns_solar_torch <dbl>, owns_mobile_phone <dbl>,

## # owns_motorcyle <dbl>, owns_NA <dbl>, owns_fridge <dbl>,

## # owns_electricity <dbl>, owns_sofa_set <dbl>, owns_lorry <dbl>,

## # owns_sterio <dbl>, owns_computer <dbl>, owns_car <dbl>Note that the original items_owned variable is lost during the separate_longer_delim()

step, so I used left_join() to merge it back in for demonstration purposes.

If you need to do this to many columns (wich is likely), it is worth creating a function:

create_dummies <- function(df,stringvar,prefix="",delim = ";") {

#Function that generates dummies from a string variable

#containing multi-response answers

#returns dataset without original var, but with dummies.

df %>%

separate_longer_delim({{ stringvar }}, delim = delim) %>%

mutate(value = 1) %>%

pivot_wider(names_from = {{stringvar}},

values_from = value,

names_prefix = prefix,

values_fill = 0)

}

read_csv(here("data/SAFI_clean.csv"), na = "NULL") %>%

mutate(items_owned = ifelse(is.na(items_owned),"None",items_owned)) %>%

create_dummies(stringvar = items_owned, prefix = "owns_") %>%

create_dummies(months_lack_food,"lack_food_")## Rows: 131 Columns: 14

## ── Column specification ────────────────────────────────────────────────────────

## Delimiter: ","

## chr (7): village, respondent_wall_type, memb_assoc, affect_conflicts, items...

## dbl (6): key_ID, no_membrs, years_liv, rooms, liv_count, no_meals

## dttm (1): interview_date

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.## # A tibble: 131 × 43

## key_ID village interview_date no_membrs years_liv respondent_wall_type

## <dbl> <chr> <dttm> <dbl> <dbl> <chr>

## 1 1 God 2016-11-17 00:00:00 3 4 muddaub

## 2 2 God 2016-11-17 00:00:00 7 9 muddaub

## 3 3 God 2016-11-17 00:00:00 10 15 burntbricks

## 4 4 God 2016-11-17 00:00:00 7 6 burntbricks

## 5 5 God 2016-11-17 00:00:00 7 40 burntbricks

## 6 6 God 2016-11-17 00:00:00 3 3 muddaub

## 7 7 God 2016-11-17 00:00:00 6 38 muddaub

## 8 8 Chirodzo 2016-11-16 00:00:00 12 70 burntbricks

## 9 9 Chirodzo 2016-11-16 00:00:00 8 6 burntbricks

## 10 10 Chirodzo 2016-12-16 00:00:00 12 23 burntbricks

## # ℹ 121 more rows

## # ℹ 37 more variables: rooms <dbl>, memb_assoc <chr>, affect_conflicts <chr>,

## # liv_count <dbl>, no_meals <dbl>, instanceID <chr>, owns_bicycle <dbl>,

## # owns_television <dbl>, owns_solar_panel <dbl>, owns_table <dbl>,

## # owns_cow_cart <dbl>, owns_radio <dbl>, owns_cow_plough <dbl>,

## # owns_solar_torch <dbl>, owns_mobile_phone <dbl>, owns_motorcyle <dbl>,

## # owns_None <dbl>, owns_fridge <dbl>, owns_electricity <dbl>, …Renaming many variables

Let’s say we need to rename a bunch of variables.

One way is to use a named list:

# this follows the pattern new_name = old_name

newnames <- c("no_livestock" = "liv_count",

"no_rooms" = "rooms",

"affect_conflicts" = "ffect_conflicts")

read_csv(here("data/SAFI_clean.csv"), na = "NULL") %>%

rename(any_of(newnames)) %>%

glimpse()## Rows: 131

## Columns: 14

## $ key_ID <dbl> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15…

## $ village <chr> "God", "God", "God", "God", "God", "God", "God", …

## $ interview_date <dttm> 2016-11-17, 2016-11-17, 2016-11-17, 2016-11-17, …

## $ no_membrs <dbl> 3, 7, 10, 7, 7, 3, 6, 12, 8, 12, 6, 7, 6, 10, 5, …

## $ years_liv <dbl> 4, 9, 15, 6, 40, 3, 38, 70, 6, 23, 20, 20, 8, 20,…

## $ respondent_wall_type <chr> "muddaub", "muddaub", "burntbricks", "burntbricks…

## $ no_rooms <dbl> 1, 1, 1, 1, 1, 1, 1, 3, 1, 5, 1, 3, 1, 3, 2, 1, 1…

## $ memb_assoc <chr> NA, "yes", NA, NA, NA, NA, "no", "yes", "no", "no…

## $ affect_conflicts <chr> NA, "once", NA, NA, NA, NA, "never", "never", "ne…

## $ no_livestock <dbl> 1, 3, 1, 2, 4, 1, 1, 2, 3, 2, 2, 2, 3, 3, 3, 4, 1…

## $ items_owned <chr> "bicycle;television;solar_panel;table", "cow_cart…

## $ no_meals <dbl> 2, 2, 2, 2, 2, 2, 3, 2, 3, 3, 2, 3, 2, 3, 2, 3, 2…

## $ months_lack_food <chr> "Jan", "Jan;Sept;Oct;Nov;Dec", "Jan;Feb;Mar;Oct;N…

## $ instanceID <chr> "uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef", "uui…Note that "affect_conflicts" = "ffect_conflicts" didn’t do anything,

as there is no ffect_conflicts variable.

any_of() just ignored any variables not present in the data.

This can be useful in data pipelines using multiple files,

where some files have mistyped variable names.

What if we want to do the same thing to many variables? For example, turning them all to uppercase?

We could use newnames = c(KEY_ID = key_ID, VILLAGE = village)

etc. etc.,

but that’d be extremely tedious.

Instead, there is rename_with(), which takes two arugments:

.fn: A function that takes the variable names of your dataset as an argument..cols: a tidy select statement defining which columns to change. Defaults to all columns.

This is what it’d look like:

## Rows: 131

## Columns: 14

## $ KEY_ID <dbl> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15…

## $ VILLAGE <chr> "God", "God", "God", "God", "God", "God", "God", …

## $ INTERVIEW_DATE <dttm> 2016-11-17, 2016-11-17, 2016-11-17, 2016-11-17, …

## $ NO_MEMBRS <dbl> 3, 7, 10, 7, 7, 3, 6, 12, 8, 12, 6, 7, 6, 10, 5, …

## $ YEARS_LIV <dbl> 4, 9, 15, 6, 40, 3, 38, 70, 6, 23, 20, 20, 8, 20,…

## $ RESPONDENT_WALL_TYPE <chr> "muddaub", "muddaub", "burntbricks", "burntbricks…

## $ ROOMS <dbl> 1, 1, 1, 1, 1, 1, 1, 3, 1, 5, 1, 3, 1, 3, 2, 1, 1…

## $ MEMB_ASSOC <chr> NA, "yes", NA, NA, NA, NA, "no", "yes", "no", "no…

## $ AFFECT_CONFLICTS <chr> NA, "once", NA, NA, NA, NA, "never", "never", "ne…

## $ LIV_COUNT <dbl> 1, 3, 1, 2, 4, 1, 1, 2, 3, 2, 2, 2, 3, 3, 3, 4, 1…

## $ ITEMS_OWNED <chr> "bicycle;television;solar_panel;table", "cow_cart…

## $ NO_MEALS <dbl> 2, 2, 2, 2, 2, 2, 3, 2, 3, 3, 2, 3, 2, 3, 2, 3, 2…

## $ MONTHS_LACK_FOOD <chr> "Jan", "Jan;Sept;Oct;Nov;Dec", "Jan;Feb;Mar;Oct;N…

## $ INSTANCEID <chr> "uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef", "uui…The variables names are used as the argument to toupper(),

which returns them in upper case.

But what if we need to provide more arguments?

Let’s say we want to append _0 to all columns,

except the ID columns,

to indicate this is baseline data.

We use paste0() which takes two arguments,

the variable name and string we wish to append:

read_csv(here("data/SAFI_clean.csv"), na = "NULL") %>%

rename_with(.fn = ~paste0(.x, "_0"), .cols = !key_ID) %>%

glimpse()## Rows: 131

## Columns: 14

## $ key_ID <dbl> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, …

## $ village_0 <chr> "God", "God", "God", "God", "God", "God", "God"…

## $ interview_date_0 <dttm> 2016-11-17, 2016-11-17, 2016-11-17, 2016-11-17…

## $ no_membrs_0 <dbl> 3, 7, 10, 7, 7, 3, 6, 12, 8, 12, 6, 7, 6, 10, 5…

## $ years_liv_0 <dbl> 4, 9, 15, 6, 40, 3, 38, 70, 6, 23, 20, 20, 8, 2…

## $ respondent_wall_type_0 <chr> "muddaub", "muddaub", "burntbricks", "burntbric…

## $ rooms_0 <dbl> 1, 1, 1, 1, 1, 1, 1, 3, 1, 5, 1, 3, 1, 3, 2, 1,…

## $ memb_assoc_0 <chr> NA, "yes", NA, NA, NA, NA, "no", "yes", "no", "…

## $ affect_conflicts_0 <chr> NA, "once", NA, NA, NA, NA, "never", "never", "…

## $ liv_count_0 <dbl> 1, 3, 1, 2, 4, 1, 1, 2, 3, 2, 2, 2, 3, 3, 3, 4,…

## $ items_owned_0 <chr> "bicycle;television;solar_panel;table", "cow_ca…

## $ no_meals_0 <dbl> 2, 2, 2, 2, 2, 2, 3, 2, 3, 3, 2, 3, 2, 3, 2, 3,…

## $ months_lack_food_0 <chr> "Jan", "Jan;Sept;Oct;Nov;Dec", "Jan;Feb;Mar;Oct…

## $ instanceID_0 <chr> "uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef", "u…In this case, we specify the function as a

a purrr-style inline anonymous function

(i.e. preceded by a ~), and we supply the variable names as .x.

(This all works the same as across() above.)

Now, let’s replace all instances of membrs or memb in variable names with

members (so no_membrs becomes no_members,

and memb_assoc becomes members_assoc.

For this I will use gsub(),

which takes three arguments:

- A pattern (expressed as a regular expression) to look for;

- a replacement for all matches of the pattern; and,

- the data to look in (in this case the varaible names).

read_csv(here("data/SAFI_clean.csv"), na = "NULL") %>%

rename_with(.fn = ~gsub("membrs|memb", "members",.x)) %>%

glimpse()## Rows: 131

## Columns: 14

## $ key_ID <dbl> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15…

## $ village <chr> "God", "God", "God", "God", "God", "God", "God", …

## $ interview_date <dttm> 2016-11-17, 2016-11-17, 2016-11-17, 2016-11-17, …

## $ no_members <dbl> 3, 7, 10, 7, 7, 3, 6, 12, 8, 12, 6, 7, 6, 10, 5, …

## $ years_liv <dbl> 4, 9, 15, 6, 40, 3, 38, 70, 6, 23, 20, 20, 8, 20,…

## $ respondent_wall_type <chr> "muddaub", "muddaub", "burntbricks", "burntbricks…

## $ rooms <dbl> 1, 1, 1, 1, 1, 1, 1, 3, 1, 5, 1, 3, 1, 3, 2, 1, 1…

## $ members_assoc <chr> NA, "yes", NA, NA, NA, NA, "no", "yes", "no", "no…

## $ affect_conflicts <chr> NA, "once", NA, NA, NA, NA, "never", "never", "ne…

## $ liv_count <dbl> 1, 3, 1, 2, 4, 1, 1, 2, 3, 2, 2, 2, 3, 3, 3, 4, 1…

## $ items_owned <chr> "bicycle;television;solar_panel;table", "cow_cart…

## $ no_meals <dbl> 2, 2, 2, 2, 2, 2, 3, 2, 3, 3, 2, 3, 2, 3, 2, 3, 2…

## $ months_lack_food <chr> "Jan", "Jan;Sept;Oct;Nov;Dec", "Jan;Feb;Mar;Oct;N…

## $ instanceID <chr> "uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef", "uui…